In recent years, economics has become much more empirical and less theoretical. It is not just that empirical work is more valued than theoretical work. Some economists make the claim that they don’t need theory or that theory is a handicap. They claim to just listen to what the numbers have to say.

Unfortunately, there are a lot of numbers and you can’t listen to all of them. You have to have some method of deciding which numbers to listen to and which to ignore. Theory can play a role in these decisions (here’s Nobel Laureate Lars Hansen defending the role of theory). But so can one’s biases, whether ideological or simply the natural desire for publication. Then there are the numbers you don’t have because they aren’t collected. We never have data on everything. The silence of uncollected data can be deafening.

With any empirical study, there is always a question of reliability: how robust are the findings, and how much do they depend on various assumptions? I want to use a recent study of the rise of ridesharing services (Uber and Lyft) to make some observations about the state of empirical research in economics.

Before we look at the study, ask yourself whether you think ridesharing has increased or reduced traffic deaths in the United States. One thought would be that it has reduced traffic deaths. Many traffic deaths involve drunk-driving. Ridesharing makes it easier to drink without driving. But there could be other effects. Ridesharing is said to have increased traffic in many cities. This could lead to more accidents as Uber and Lyft drivers cruise around trying to find fares. The increase in cars on the road could make driving more hazardous for all drivers. Economics has nothing to say about the size of these effects or whether there are other effects that might further complicate expectations and predictions. For example, are Uber and Lyft drivers better or worse drivers than the average driver? I could imagine arguments on either side.

Ultimately then, how Uber and Lyft have affected traffic deaths is an empirical question. The challenge is isolating the effect of the increased availability of ridesharing from other factors that affect traffic deaths.

A new working paper from the Becker-Friedman Institute at the University of Chicago, “The Cost of Convenience: Ridesharing and Traffic Fatalities,” by John Barrios, Yael Hochberg, and Hanyi Yi, tries to measure the independent impact of Uber and Lyft. [UPDATE: The original version of the study is no longer up at the Becker-Friedman Institute. I have linked to an earlier version.] They conclude that ridesharing has increased traffic deaths, including pedestrian deaths, by 987 per year, an increase of 3%. The “annual cost in human lives range from $5.33 billion to $13.24 billion per year.” Such, they argue, is the cost of convenience.
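
The headline figures hang together through some simple arithmetic. The sketch below uses only the three numbers quoted above (987 deaths, a 3% increase, and the $5.33 to $13.24 billion range). The assumption that the dollar range is just the death estimate multiplied by a range of values per life is mine; the passage quoted here does not spell out how the range was computed.

```python
# Numbers quoted from the paper's summary. The division below ASSUMES the dollar
# range is simply (extra deaths) x (value per life); that is an assumption.
EXTRA_DEATHS = 987                      # estimated additional traffic deaths per year
PCT_INCREASE = 0.03                     # "an increase of 3%" (itself a rounded figure)
COST_LOW, COST_HIGH = 5.33e9, 13.24e9   # reported annual cost range, in dollars

# Implied value per life at each end of the reported range.
value_per_life_low = COST_LOW / EXTRA_DEATHS
value_per_life_high = COST_HIGH / EXTRA_DEATHS

# Implied baseline: if 987 extra deaths is about 3%, the base is about 987 / 0.03.
implied_baseline = EXTRA_DEATHS / PCT_INCREASE

print(f"implied value per life: ${value_per_life_low / 1e6:.1f}M to ${value_per_life_high / 1e6:.1f}M")
print(f"implied baseline deaths per year: roughly {implied_baseline:,.0f}")
```

On that assumption the range works out to roughly $5.4 million to $13.4 million per life, against a baseline of roughly 33,000 traffic deaths a year. Because the 3% is itself rounded, the baseline is only a ballpark figure.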

Almost 1000 deaths per year. My first reaction was actually shame. Maybe I should reconsider my use of Uber and Lyft. Does it give you pause about your own practice of using ridesharing? Given this increase, should ridesharing be banned or curtailed through taxation or other means? Should you feel guilty enjoying the convenience of Uber and Lyft knowing that the result is a larger chance of death for other riders and pedestrians?

Of course, the actual number may be much larger or much smaller. Or even negative. Before deciding how you or a city should respond to the study’s conclusions, you’d want to know something about the accuracy of its findings. It’s a clever study: it exploits the fact that ridesharing did not enter every American city at the same time. In principle, that allows the authors to measure the impact of Uber separately from other factors common to all cities.
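
A common way to exploit staggered entry is a two-way fixed-effects (difference-in-differences) regression: fatalities on a ridesharing indicator plus a dummy for every city and every year. The city dummies absorb fixed differences between cities; the year dummies absorb shocks that hit all cities in a given year. The sketch below runs that regression on simulated data. The entry years, the effect size of 2.0, and every other number are invented for illustration, and nothing here claims to be the paper’s exact specification.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical panel: 40 cities observed from 2005 through 2018.
years = np.arange(2005, 2019)
n_cities, n_years = 40, len(years)
# Hypothetical staggered entry: ridesharing arrives between 2011 and 2016.
entry_year = rng.integers(2011, 2017, size=n_cities)
TRUE_EFFECT = 2.0  # invented effect on fatalities per city-year

city_fe = rng.normal(50, 10, size=n_cities)                          # fixed city differences
year_fe = 0.5 * np.arange(n_years) + rng.normal(0, 1, size=n_years)  # shocks common to all cities

# One row per city-year.
city_idx = np.repeat(np.arange(n_cities), n_years)
year_idx = np.tile(np.arange(n_years), n_cities)
treated = (years[year_idx] >= entry_year[city_idx]).astype(float)

fatalities = (city_fe[city_idx] + year_fe[year_idx]
              + TRUE_EFFECT * treated
              + rng.normal(0, 1, size=city_idx.size))

def twfe_effect(y, d, city_idx, year_idx, n_cities, n_years):
    """Coefficient on d from OLS with a dummy per city and per year (one year dropped)."""
    city_dummies = np.eye(n_cities)[city_idx]
    year_dummies = np.eye(n_years)[year_idx][:, 1:]
    X = np.column_stack([d, city_dummies, year_dummies])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[0]

beta_hat = twfe_effect(fatalities, treated, city_idx, year_idx, n_cities, n_years)
print(f"estimated effect: {beta_hat:.2f} (true value {TRUE_EFFECT})")
```

Because the simulated effect is the same everywhere and the only other influences are fixed by city or shared by year, the estimate lands close to 2.0. The design cannot remove an influence that varies by both city and year and that moves with ridesharing’s arrival; such an influence stays in the estimate.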

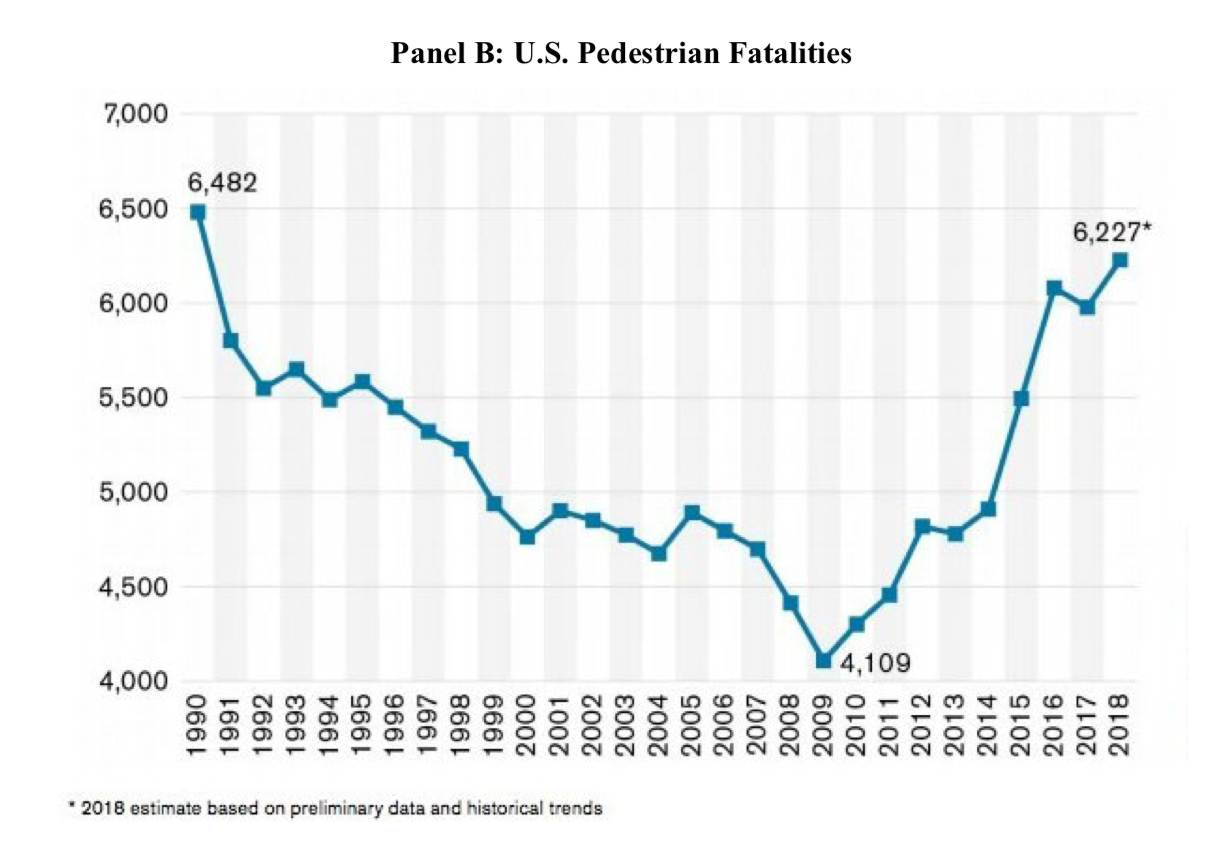

But let’s step back for a minute and look at this chart from the paper on pedestrian fatalities in the United States:

Uber started in 2010 and, along with Lyft, spread fairly steadily to many cities afterwards. It seems plausible, then, that Uber and Lyft might be responsible for some or all of the roughly 50% increase in pedestrian deaths in the United States over the last decade.

But is it really plausible that ridesharing would increase the deaths of pedestrians? What about ridesharing would lead to the death of more pedestrians? The underlying argument of the study is that the increases in traffic and congestion caused by ridesharing lead to more accidents, including accidents involving pedestrians. This isn’t just pedestrians who die in an accident involving Uber or Lyft. This is all pedestrian deaths from traffic. So in the case of pedestrian deaths (a portion of all traffic deaths), the argument is that the increase in cars on the road due to ridesharing makes it more likely that pedestrians will be killed.

Could be. Of course, there is another dramatic change in the US over this time period. By one estimate, smartphone ownership among the US population went from 20% to 70% between 2010 and 2018. Distracted driving could be behind the increase in pedestrian deaths (and in traffic accidents more generally).

So to isolate the effect of ridesharing, you’d want good data on trends in smartphone ownership, at least by city and ideally among the driving population. Better still, you’d want information on the proportion of drivers who use their cellphones while driving.

The authors of the study are aware of this problem:

Finally, to capture potential time-and-city varying confounders, such as population changes, increases in employment or income, or smartphone adoption levels (the latter of which may lead to more distracted driving and for which data are unavailable at the city level), we further control for population level and per capita income (which survey data suggest is highly correlated with smartphone adoption and usage).

A confounder is something other than ridesharing that affects fatalities and that also changes along with the spread of ridesharing. Smartphone, or even cellphone, use is definitely a confounder. In some states, using a cellphone while driving is illegal. The norms about using cellphones while driving are changing as well. Teenagers particularly struggle to avoid using their phones while driving. (According to the Department of Transportation, there are about 3,500 traffic deaths per year due to “distracted driving.” I have no idea how they actually measure that number. The webpage where I got the number doesn’t seem to say.)

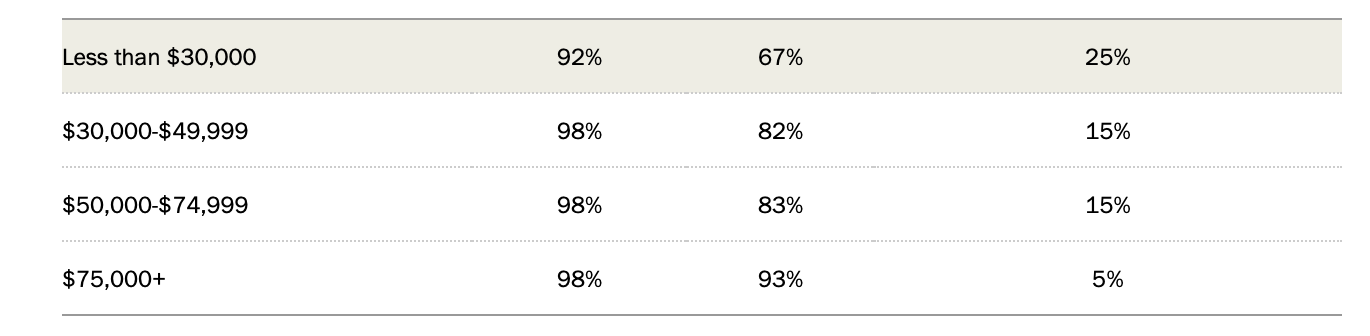

The bottom line is that the ridesharing study doesn’t have data by city on cellphone ownership or on cellphone use while driving. So now what? The authors use population and per-capita income as a proxy for the effect of smartphones. They footnote two Pew studies (here and here) as evidence that smartphone adoption and usage are highly correlated with income. Here is a table from one of the Pew studies they cite. The first column is ownership of any cellphone, the middle column is ownership of a smartphone, and the third column is ownership of a cellphone that is just a cellphone, without access to the web:

Any Cellphone, Smartphone, and Just Cellphone Ownership in US, 2018

Yes, phone ownership is correlated with income. But by 2018, almost everyone who would be driving owned some kind of phone. Does per-capita income really do a good job of controlling for how drivers used their cellphones in different cities between 2010 and 2018? Might state laws on using cellphones while driving, or local norms that differ across cities and evolve over time, make per-capita income a poor proxy for distracted driving?

Given that per-capita income is an imperfect measure of cellphone use while driving, how confident should we be in the claim that ridesharing has killed more pedestrians and put more traffic on the streets, resulting in more deaths and injuries from accidents? The worry is that the study attributes deaths caused by cellphone use to ridesharing instead. Is there any meaning to the statement that ridesharing has increased deaths by 987 annually or led to losses of human life with a monetary value of between $5.33 billion and $13.24 billion per year? The study’s estimated increase in deaths isn’t exactly 987; it has decimal places. The authors explain in a footnote that they have rounded the death toll to the nearest whole number. I think “close to 1000” would have been more honest, but then they could not have calculated the monetary losses to two decimal places…
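
The worry can be made concrete with a simulation. In the sketch below, ridesharing has no effect at all on fatalities by construction; deaths rise only with a hypothetical measure of phone use while driving. The one link between the two is timing: each city’s phone use is assumed to ramp up around the time ridesharing arrives there. That link, the effect sizes, and the noisy “income” proxy are all invented for illustration. The same two-way fixed-effects regression is run three ways: with no control, with the noisy proxy, and with the true phone-use measure that the study does not have.

```python
import numpy as np

rng = np.random.default_rng(1)

n_cities, n_years = 60, 14
t = np.arange(n_years)

# Hypothetical: each city's phone-use ramp is centered on a different year...
ramp_center = rng.uniform(4, 10, size=n_cities)
# ...and ridesharing arrives near that same year (the ASSUMED confounding link).
entry_t = np.clip(np.round(ramp_center + rng.normal(0, 0.7, size=n_cities)), 1, n_years - 1)

city_idx = np.repeat(np.arange(n_cities), n_years)
year_idx = np.tile(t, n_cities)

phone_use = 1 / (1 + np.exp(-2 * (t[year_idx] - ramp_center[city_idx])))  # ramps from 0 to 1
treated = (t[year_idx] >= entry_t[city_idx]).astype(float)

RIDESHARE_EFFECT = 0.0  # by construction, ridesharing kills no one here
PHONE_EFFECT = 3.0      # invented effect of distracted driving

city_fe = rng.normal(50, 10, size=n_cities)
year_fe = 0.3 * t + rng.normal(0, 1, size=n_years)
fatalities = (city_fe[city_idx] + year_fe[year_idx]
              + RIDESHARE_EFFECT * treated + PHONE_EFFECT * phone_use
              + rng.normal(0, 0.5, size=city_idx.size))

# A weak hypothetical proxy: tracks phone use, plus a lot of unrelated variation.
income_proxy = phone_use + rng.normal(0, 1, size=city_idx.size)

def twfe_effect(y, d, controls):
    """Coefficient on d from OLS with extra controls plus city and year dummies."""
    city_dummies = np.eye(n_cities)[city_idx]
    year_dummies = np.eye(n_years)[year_idx][:, 1:]
    X = np.column_stack([d, *controls, city_dummies, year_dummies])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[0]

no_control = twfe_effect(fatalities, treated, [])
proxy_control = twfe_effect(fatalities, treated, [income_proxy])
true_control = twfe_effect(fatalities, treated, [phone_use])

print(f"no control:     {no_control:.2f}")
print(f"income proxy:   {proxy_control:.2f}")
print(f"true phone use: {true_control:.2f}  (true ridesharing effect is {RIDESHARE_EFFECT})")
```

With these invented inputs, the first two estimates come out clearly positive even though the true ridesharing effect is zero, and only the version that controls for actual phone use lands near zero. This shows the shape of the worry, not evidence that it happened: a weak proxy can leave distracted-driving deaths inside the ridesharing coefficient, but the simulation says nothing about how large that leakage is in the real data.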

There are some good things about the study. It’s 45 pages long with all kinds of sophisticated analysis to tease out that number of 987. There’s even an appendix that looks at the sensitivity of the results to various assumptions. That’s a great practice. Unfortunately, there is no analysis of how sensitive the results are to using per-capita income as a proxy for distracted driving. There can’t be.

Every econometric analysis suffers from the reality that we don’t have data on everything. One view in response to that reality is that we do the best we can. You mention the shortcomings of your research and allow the reader to draw conclusions about the reliability of the study.

My own reaction is colored by the pleasure I get from using Uber and Lyft and knowing that it has made life easier for many people. But my first reaction to this study really was shame. Traffic deaths have been rising in recent years? Maybe it really is due to ridesharing. I’d forgotten about cellphone use. Given my biases, I now easily conclude that the 987 number is unreliable because it does seem to me that per-capita income isn’t going to do a very good job. Should I reconsider? Is the lack of data on cellphone usage as decisive as I want to think it is?

It would be tempting to say that this is just a working paper. Perhaps it will get no traction. But I doubt it. The Becker-Friedman Institute will spread it around — I only knew about the study because the Institute sent me an email. The media will be eager to repeat the finding because people have strong feelings about Uber and Lyft: “U of Chicago Study Finds Ridesharing Kills 1000 People Each Year.” Taxicab owners and their supporters will cite it.

I close with some questions for the authors of the study and an invitation. How confident are you that your estimate of 987 deaths per year is accurate? I know that you did the best you could given the limitations of the data. But do those limitations dent your confidence? My invitation is that if you disagree with what I’ve written or if I’ve made any mistakes of interpretation, I’m happy to print a response here.